There is an argument that modern-day IT engineers really don’t need to know anything about electronics to do their job. After all, when things break we tend to isolate the part in question and replace it – and I have to admit that I can’t remember the last time I needed to warm up the iron in anger for IT-related repairs.

Sure, I’ve knocked together some things recently, like my own custom PoE door intercom and the beginnings of an arcade cabinet controller, but generally speaking, for grown-up IT production work, the red light comes on, you pull out the module, slap in a new one and send the old one off to get it replaced under warranty.

So, when our six-year-old Stonefly SAN array failed with a POST message advising PCIe Training Error, I absent-mindedly reached to the shelf for a replacement RAID controller whilst planning what I was going to have for tea. Then I realised that I didn’t have one… No problem, I thought, there is nothing important left on the array. But I was wrong – very wrong. In the rush to decommission it over the past few weeks I’d left a virtual machine on it that was doing some key file services, and the failure had happened before the nightly backups would have completed… Suddenly I wasn’t hungry anymore, and I pulled my chair up to the KVM in barely subdued panic.

The fault suggested that the RAID controller on the storage concentrator (a Dell PowerEdge 1950) was the issue. The storage concentrator connects via 4Gb Fibre Channel to the Xyratex array slung underneath, with about 7TB of raw SAS storage. Without the concentrator and its particular flavour of OS (some custom Linux derivative) providing the iSCSI target, getting access to the VM on the array was going to get really boring. I needed to see if I could fix the RAID controller – quickly.

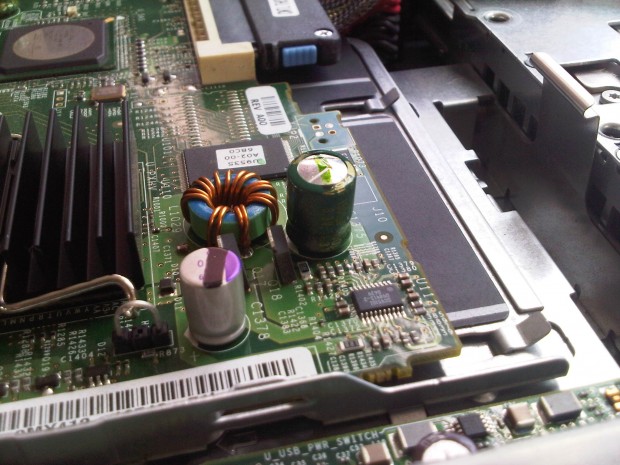

Luckily, once I had gotten the RAID controller out I could immediately see a blown capacitor on the board – have a look.

As you can see, the capacitor has clearly swollen and its top has domed. With fingers crossed I decided to whip it off and slap in a replacement to see what would happen. Thirty minutes later, having left a trail of PCs behind me as I went round the office trying to find a spare motherboard with a matching 6.3V 1500μF capacitor, I was gently soldering on the replacement.

I slapped the controller back in, powered the concentrator back up and tada! The OS booted and I could get access to the VM (which was very rapidly Storage vMotioned the hell out of there).

It looks like this capacitor issue is something that has happened a lot. I found this post where others with Dell PowerEdge servers have found swollen capacitors…

For reference, the fault detail was E171F PCIE Fatal Err B0 D3 F0.
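If you want to track down which slot a message like that is pointing at, the B/D/F part reads as a PCI bus, device and function number. Here is a rough Python sketch that pulls those out and prints them the way `lspci` would show them. The names `parse_fault` and `PcieAddress` are my own for illustration, and I'm assuming the numbers are plain decimal and that the message always has this exact shape, so treat it as a starting point rather than anything official.

```python
import re
from typing import NamedTuple, Tuple


class PcieAddress(NamedTuple):
    """Bus/device/function triple taken from the fault text (hypothetical helper)."""
    bus: int
    device: int
    function: int

    def as_lspci(self) -> str:
        # lspci prints addresses as bus:device.function, bus and device in 2-digit hex
        return f"{self.bus:02x}:{self.device:02x}.{self.function}"


# Assumed layout: "<code> <description> B<bus> D<device> F<function>"
_FAULT = re.compile(
    r"^(?P<code>E[0-9A-F]{4})\s+(?P<text>.+?)\s+"
    r"B(?P<bus>\d+)\s+D(?P<dev>\d+)\s+F(?P<fn>\d+)$"
)


def parse_fault(message: str) -> Tuple[str, str, PcieAddress]:
    """Split a fault line into (code, description, PCIe address)."""
    match = _FAULT.match(message.strip())
    if match is None:
        raise ValueError(f"unrecognised fault message: {message!r}")
    # Assumption: the B/D/F numbers are decimal
    address = PcieAddress(int(match["bus"]), int(match["dev"]), int(match["fn"]))
    return match["code"], match["text"], address


if __name__ == "__main__":
    code, text, address = parse_fault("E171F PCIE Fatal Err B0 D3 F0")
    print(code, "|", text, "|", address.as_lspci())
```

On a box that still boots into Linux, you could then compare that address against the `lspci` listing to see which device is sitting there.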

No “after” photo?! I’m about to try this, too. I wanna have a better idea what I’m up against.

Sadly not. As soon as I had soldered it on I rushed to power it up to see if it worked – thankfully it did 😉 Good luck with yours – I’m sure it’ll be fine!

The really sad part is that quality caps to replace the bad ones are only around 65 cents US each.